Google Site Command does not return indexed pages

Wrong expectations of Site: Command

If you’re trying to see how many pages of your website are indexed by Google, the ‘site:’ command will not be very helpful.

The ‘site:’ command simply lists the URLs that Google is aware of.

Those are only known URLs.

This includes pages that are not necessarily indexed.

Known URL does not mean Indexed URL

Using the Google Site operator, you may get a lot of URLs back that are:

- known to Google but are redirected

- known to Google, crawled, but not indexed

- known to Google, crawled, but considered a duplicate and therefore not returned in the search results

How the SITE command is often used by SEOs

The Google site: command is often used to check if a certain URL is indexed by Google.

However, the Google site: command only lists the URLs known, not the URLs indexed.

This means that the Google site: command is not a good way to check if a URL is indexed.

To check if a URL is indexed, you should use the actual Google Search Engine itself.

You can also use other methods, such as the URL Inspection tool in Google Search Console or URLinspector.

Why are people using the Site: command to check for indexing?

There’s simply no other convenient way to check URLs known to Google.

The site command has also changed over the years.

Google Site Command circa 2005

In 2005 you would get a lot of known and indexed URLs back.

The URLs of the Site were also sorted descending by their PageRank.

That means you could use the site command to find the strongest pages on a website.

This was a handy feature, but it was, like many SEO features in Google, crippled at some point.

Since 2009, the [Strongest Sub Pages Tool](https://www.linkresearchtools.com/seo-tools/competitor-analysis/sspt/) in LinkResearchTools has been available as a solution for finding the strongest pages on a website.

Google Site Command circa 2015

In 2015 we noticed that the Google site command was returning a lot more URLs than were indexed.

We suddenly saw a lot of URLs from subdomains that we redirected, URLs that should NOT be returned in the search results.

This was the moment (and it probably started even earlier) when the Google site command began returning the “known” URLs, not the indexed ones.

When you talk to OG SEOs, people who were in the industry long before 2015, they may not have noticed the change. They may still think that the site command returns the indexed URLs.

Example: The Google Site Command today

Today you can only use the Google site command to get a list of URLs that Google is aware of.

That is still very useful, but consider the following example:

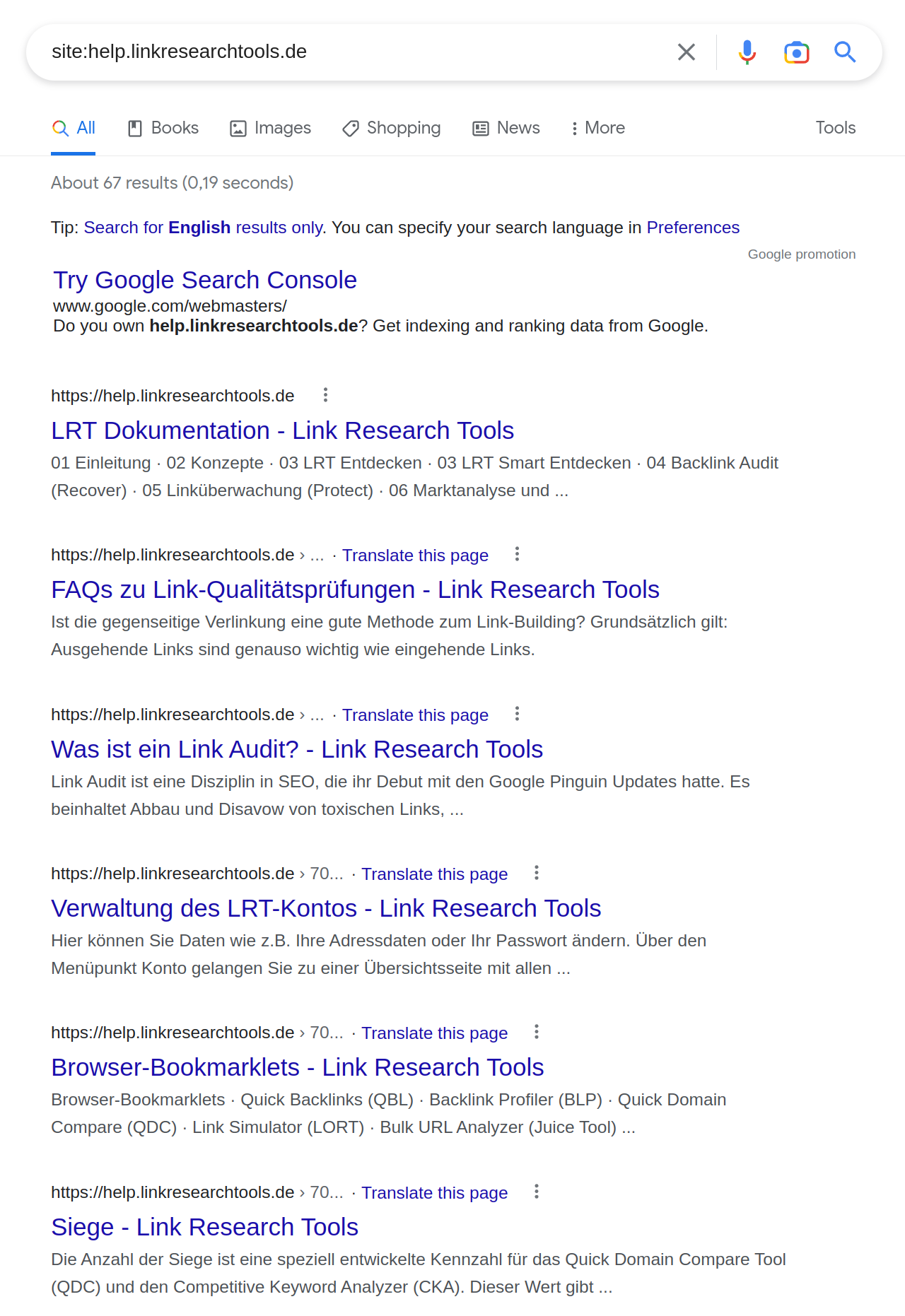

The example shows the search for site:help.linkresearchtools.de

The URL returned was part of the German online documentation for LinkResearchTools, which was hosted at this specific subdomain until around 2018.

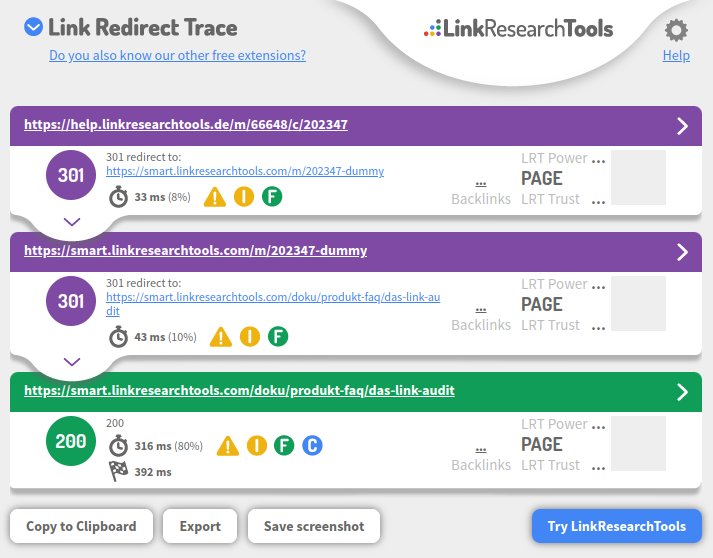

The resulting URL starts a redirect chain that begins at the old http level of the platform used back then and ends at https pages in a more recent CMS.

As you can see, something is going on there. The diagnosis was made using the popular Redirect Trace extension.
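If you prefer a script over a browser extension, a redirect chain like this one can also be traced hop by hop. The following is a minimal sketch in Python using only the standard library. It is not how the Redirect Trace extension works internally, and the names `head_fetch` and `trace_redirects` are made up for this illustration. It also assumes the server answers HEAD requests the same way it answers GET requests, which is not always the case.

```python
import http.client
from urllib.parse import urljoin, urlsplit

# Status codes treated as redirects in this sketch.
REDIRECT_CODES = {301, 302, 303, 307, 308}


def head_fetch(url, timeout=10):
    """Send one HEAD request without following redirects.

    Returns (status_code, Location header or None).
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def trace_redirects(url, fetch=head_fetch, max_hops=10):
    """Return the list of (url, status) hops, ending at the first non-redirect.

    Stops early on a loop or after max_hops requests.
    """
    hops = []
    seen = set()
    while len(hops) < max_hops and url not in seen:
        seen.add(url)
        status, location = fetch(url)
        hops.append((url, status))
        if status not in REDIRECT_CODES or not location:
            break
        url = urljoin(url, location)  # the Location header may be relative
    return hops


if __name__ == "__main__":
    # Hypothetical URL; replace it with the URL you want to diagnose.
    for hop_url, status in trace_redirects("http://example.com/old-page"):
        print(status, hop_url)
```

A chain that moves from http to https and then on to a new host shows up as several 301 lines followed by a final 200. The `fetch` parameter exists only so the logic can be tried without network access.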

And Google seems to have good reason to keep the original URL

https://help.linkresearchtools.de/m/66648/c/202347

in their URL memory (it’s not linked here, on purpose, because that would be an excellent reason to keep the URL in the memory).

Google will never return that URL in the organic search results, as it has been redirected to a new URL for a long time.

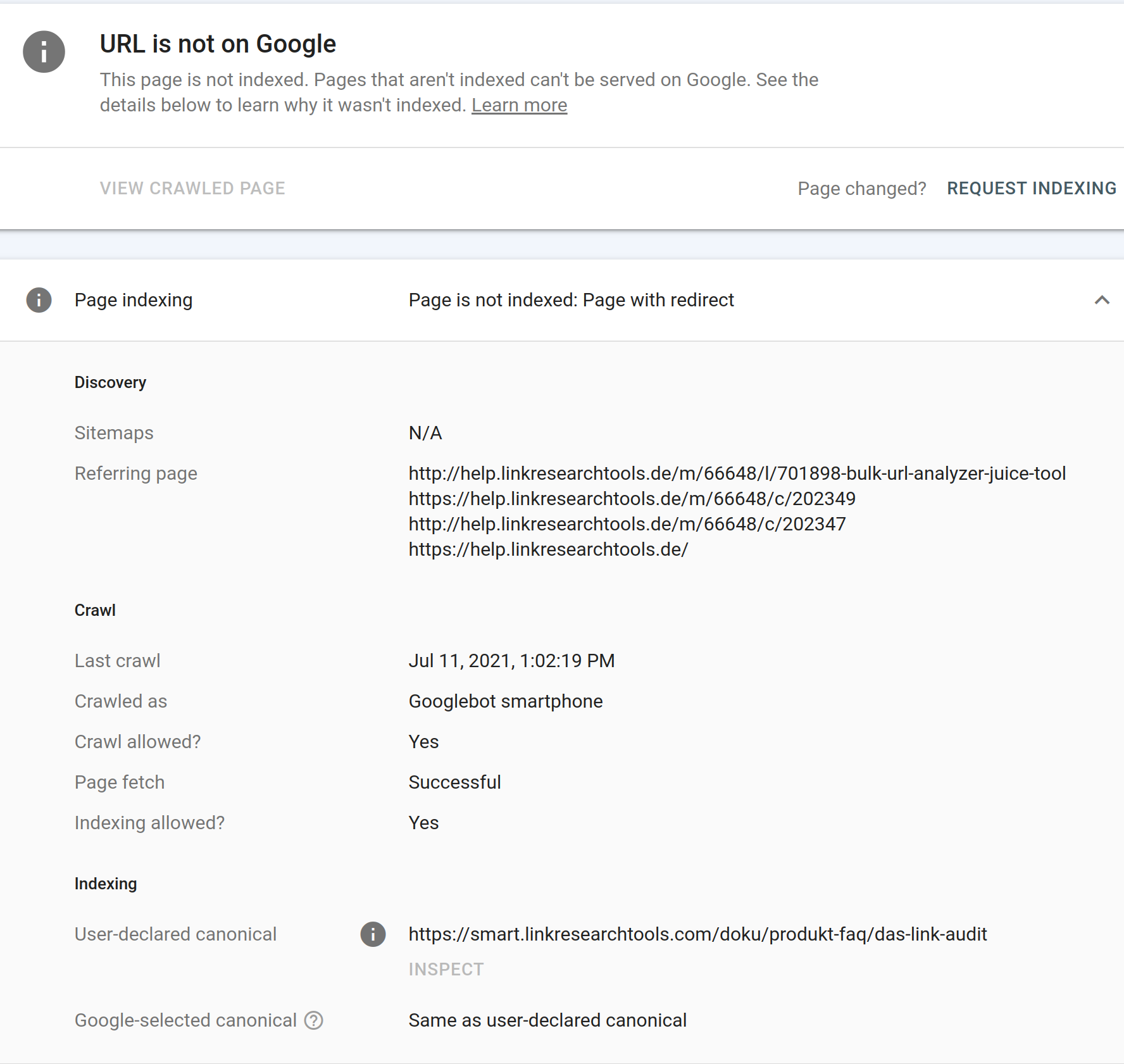

The Google Search Console confirms “URL is not on Google”

It is not on Google?

But we just found this URL

https://help.linkresearchtools.de/m/66648/c/202347

via the SITE command!

This URL was last crawled on July 11, 2021. Interesting.

This “last crawl” happened more than 24 months after the 301 redirects on that URL were put in place.

This brings back memories of the advice that you only have to leave your redirects up for a year or so and then, as a Google spokesman said, you can remove them.

When we look at the URL Inspection tool in Google Search Console, we can see that the URL is not indexed.

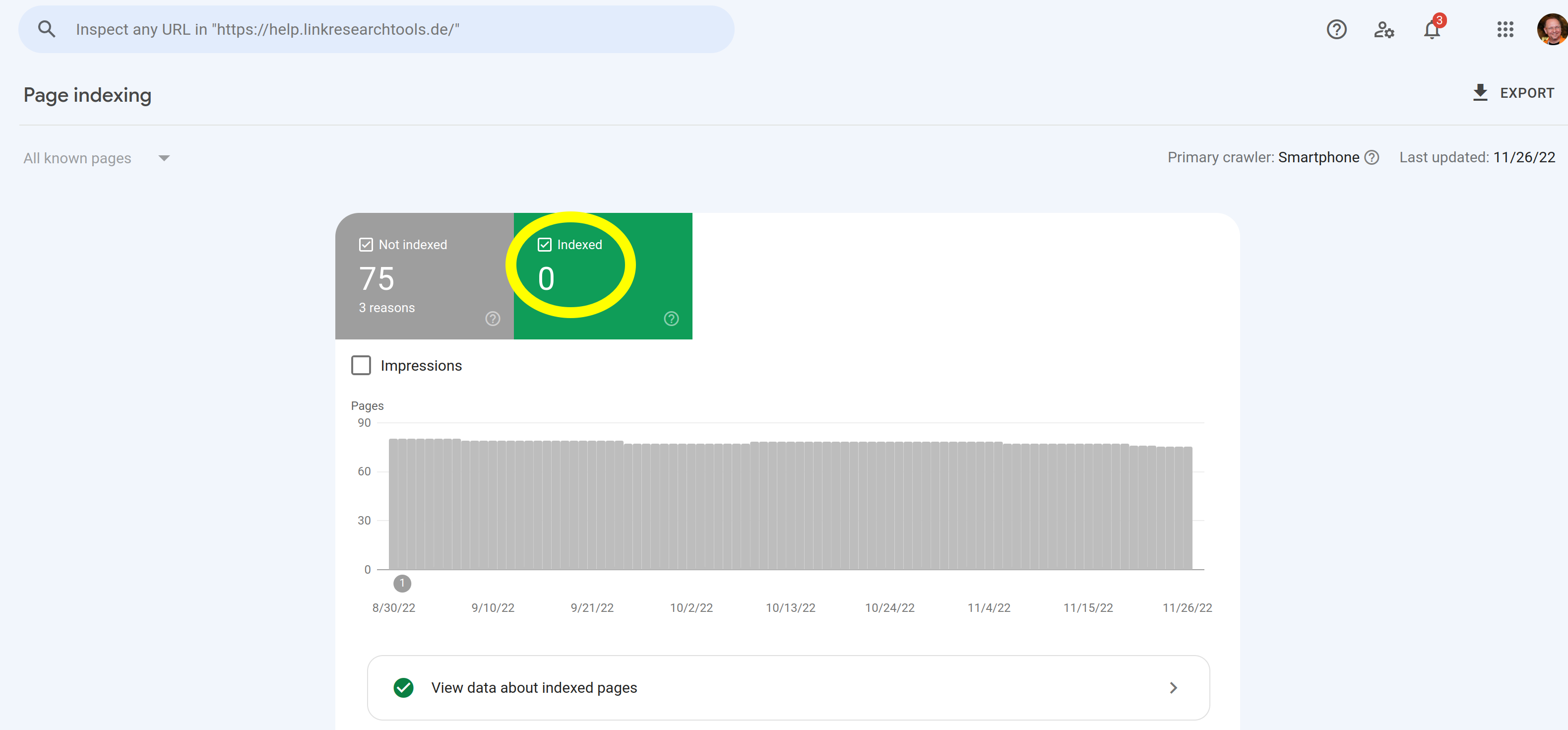

We also see that there are no indexed URLs on that subdomain at all.

The summary for the site in GSC also clearly says: 0 indexed.

Now when you scroll up, you see 67 results for the site command, don’t you?

Site Command an indicator for a “Useless URL Filter”?

One special case that has been suggested is that the Site: command does NOT list all URLs known to Google, but leaves some “useless” ones filtered out.

If this is true (it still needs to be verified and tested), this behavior would give the URLs listed by the Site: command a special meaning: a flag that they are actually able to rank.

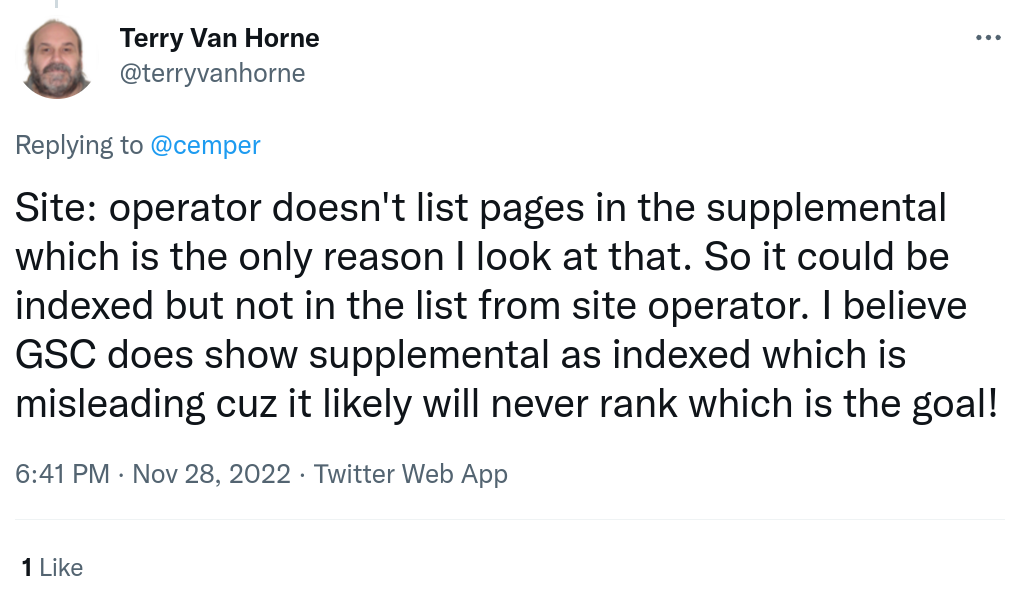

Great input by Terry van Horne

Not long ago we were reminded that the Supplemental Index used to be shown with a special label in the SERPs.

Both the indexing technology and the SERPs have changed a lot in the years since then, and it’s certainly safe to say that Google no longer uses THE Supplemental Index in its 2007 version.

But of course, a search engine has many different buckets of URLs, and it’s possible that the Site: command would only return URLs from a bucket that is more important than just any trash URL ever seen.

That doesn’t invalidate the observation that only a portion of the URLs are returned by the Site: command, and that those seem to be more meaningful than the others.

Maybe the Site: command is a good indicator that the resulting URLs passed a couple of checks and filters, and that would be valuable info for SEOs to have.

We want to test and learn more regarding such a “Useless URL filter”.

What to use to find out if a URL is indexed by Google

There are several better ways to find out if a URL is indexed in Google.

Use Google Search itself

Search for a unique phrase from the content and see if that page returns.

If several versions of the page exist, you will get back the one that Google considers the best (canonical) version.

You will NOT receive your content back if you have some penalty.
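If you want to run this check for several pages, building the exact-phrase search link by hand gets tedious. The following small Python sketch wraps a phrase in double quotes, which asks Google for an exact match, and builds the search URL. The function name `exact_phrase_search_url` is made up for this example, and the sketch only builds the link. It does not fetch or parse any results, so you still open the link in a browser and look at the result yourself.

```python
from urllib.parse import urlencode


def exact_phrase_search_url(phrase):
    """Build a Google search URL that looks for `phrase` as an exact match."""
    # Drop quotes inside the phrase and collapse whitespace so the
    # surrounding double quotes stay balanced.
    cleaned = " ".join(phrase.replace('"', " ").split())
    return "https://www.google.com/search?" + urlencode({"q": f'"{cleaned}"'})


if __name__ == "__main__":
    print(exact_phrase_search_url("Known URL does not mean Indexed URL"))
```

Pick a sentence that is unlikely to appear on any other website. A generic phrase returns other people's pages and tells you nothing about your own URL.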

Use Google Search Console and Inspect URL

You can use the Google Search Console feature and “Inspect” your URL.

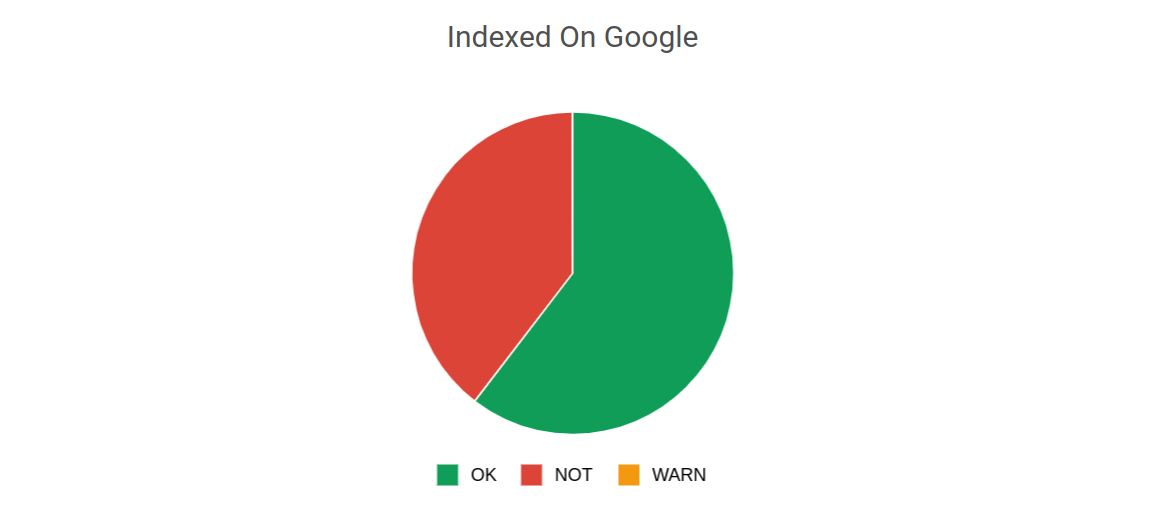

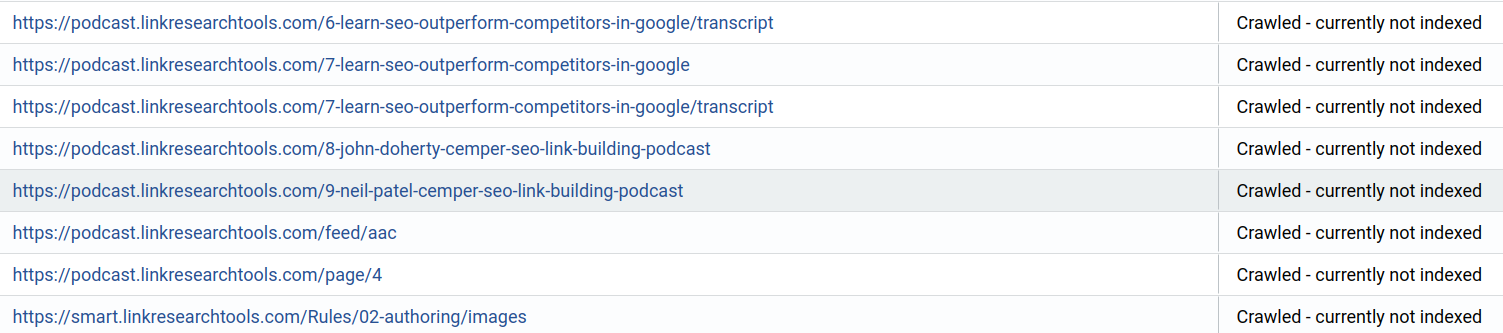

Use URLinspector for hundreds or thousands of URLs

URLinspector uses Google’s URL Inspection API and gives you an immediate, up-to-date status for all your URLs.

You can see immediately how many URLs of your Site are NOT indexed, click on the red area and get a list of all these.

These URLs may or may not be important to you, but they all come back in the SITE command, and you should be aware that they are not indexed.
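To give an idea of what such a check looks like at the API level, here is a minimal Python sketch of one call to the URL Inspection API in Google Search Console. It is an illustration only and does not describe how URLinspector is implemented. The helper names `build_inspection_request` and `summarize_inspection` are made up, the sample response is hand-written rather than real data, and the OAuth 2.0 authorization that the API requires is left out entirely. Treating the verdict `PASS` as “indexed” is a simplification.

```python
import json

# Endpoint of the Search Console URL Inspection API (POST, OAuth 2.0 required).
INSPECT_ENDPOINT = (
    "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"
)


def build_inspection_request(page_url, property_url):
    """Return the JSON body for inspecting one URL of a verified property."""
    return json.dumps({"inspectionUrl": page_url, "siteUrl": property_url})


def summarize_inspection(response):
    """Reduce an inspection response (already parsed to a dict) to the basics."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return {
        # Simplification: only the verdict PASS is counted as indexed.
        "indexed": status.get("verdict") == "PASS",
        "coverage": status.get("coverageState"),
        "last_crawl": status.get("lastCrawlTime"),
    }


if __name__ == "__main__":
    # Hypothetical page and property; both are placeholders.
    print(build_inspection_request(
        "https://www.example.com/old-page", "https://www.example.com/"
    ))

    # Hand-written sample response for illustration, not real data.
    sample = {
        "inspectionResult": {
            "indexStatusResult": {
                "verdict": "NEUTRAL",
                "coverageState": "Page with redirect",
                "lastCrawlTime": "2024-01-15T08:30:00Z",
            }
        }
    }
    print(summarize_inspection(sample))
```

A URL like the redirected one in the example above would come back as not indexed in such a summary, even though the SITE command still lists it.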

Conclusion

You cannot use the SITE command to find out if a URL is indexed or not.

URLinspector does that check automatically for you, for the whole Site.

URLinspector shows you live data, while Google Search Console (GSC) has up to 3 days of delay.

You get Google’s status for your website the same day, even during the day, not with days of delay.

Why not give it a try?

You can set up a free 14-day trial with URLinspector and see how it works for you.