How to find indexed URLs that robots.txt blocks Google from crawling


- Don’t make the mistake of blocking an indexed URL in robots.txt. URLinspector shows you exactly where that happened, based on the data Googlebot itself provided.

- If you want a URL to be de-indexed, use a noindex directive (a robots meta tag or an X-Robots-Tag HTTP header), not robots.txt.

How to spot this tech SEO problem in URLinspector

When URLinspector shows the status “Indexed, though blocked by robots.txt”, your website may be in danger: Google has these pages in its index but is no longer allowed to crawl them.

This is the first sign that already indexed pages are now blocked by robots.txt, a problem that can cause you to lose all rankings for those pages over time.

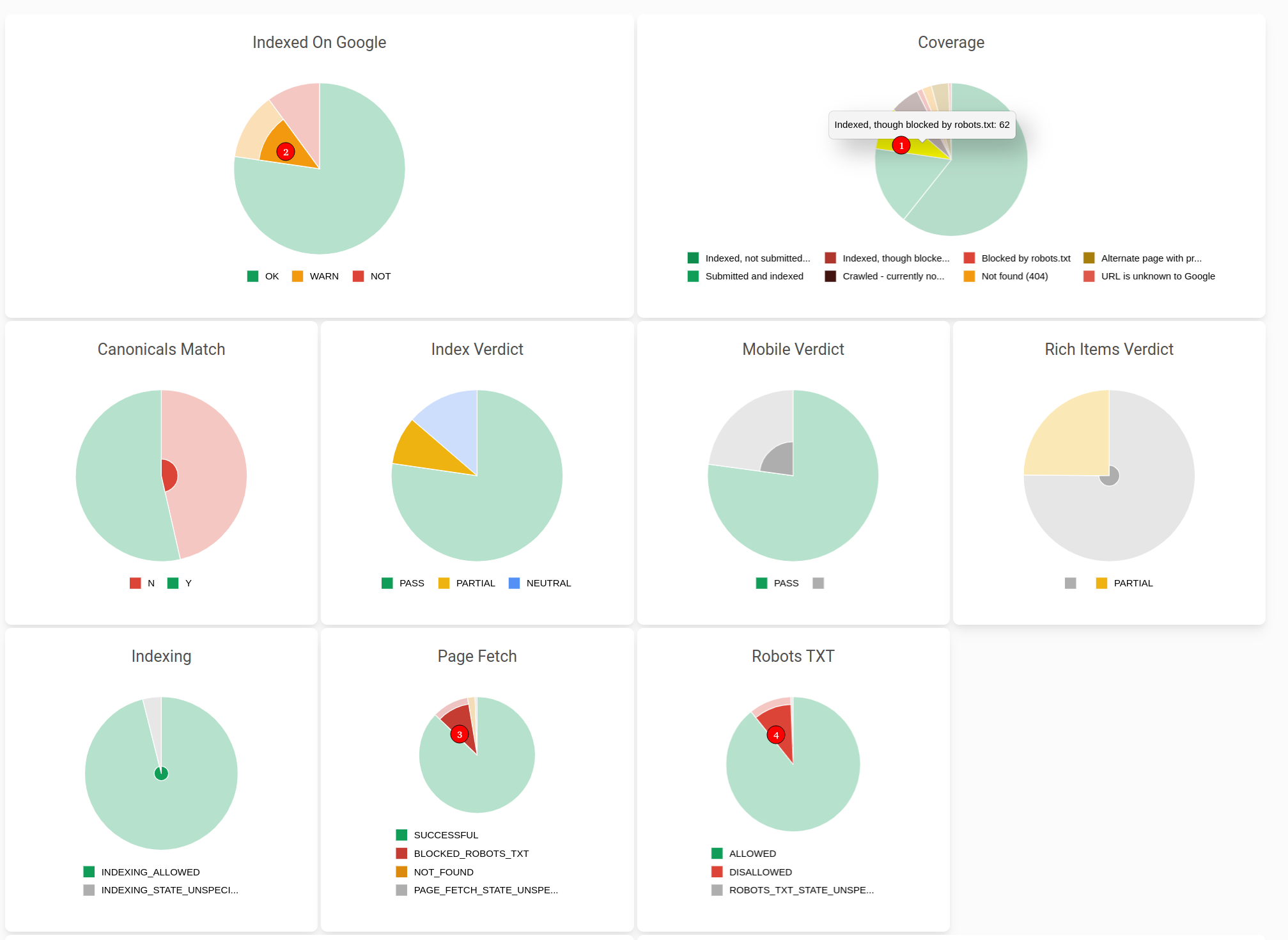

In the screenshot, we see:

(1) the coverage graph “Indexed, though blocked by robots.txt”

(2) a WARN in the “Indexed by Google” chart

(3) the status “Blocked Robots.txt” in the “Page Fetch” chart (the result of Googlebot fetching the page)

(4) the status “DISALLOWED” in the “Robots TXT” status chart

Note: when you click on any of these charts in the URLinspector app, you get a full breakdown in a table.
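To reproduce this check outside the app, here is a minimal sketch in Python. It assumes you already have the list of indexed URLs (for example, exported from a coverage report; the export step is not shown) and the text of the relevant robots.txt. The function name `blocked_indexed_urls` and the example host are hypothetical. Python's standard-library `urllib.robotparser` uses plain prefix matching and does not implement Google's `*` and `$` wildcards, so treat the result as an approximation of what Googlebot does.

```python
from urllib.robotparser import RobotFileParser


def blocked_indexed_urls(robots_txt: str, indexed_urls: list[str],
                         agent: str = "Googlebot") -> list[str]:
    """Return the indexed URLs that robots_txt disallows for the given agent.

    Hypothetical helper: robots.txt applies per host, so pass only URLs from
    the host this robots.txt belongs to.
    """
    parser = RobotFileParser()
    # parse() takes the file content as an iterable of lines (no network request)
    parser.parse(robots_txt.splitlines())
    return [url for url in indexed_urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /"
    indexed = [
        "https://shop.example.com/",
        "https://shop.example.com/products/red-shoes",
    ]
    # "Disallow: /" blocks every path, so both URLs are reported
    print(blocked_indexed_urls(robots, indexed))
```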

Context: why robots.txt blocks Google from these indexed URLs

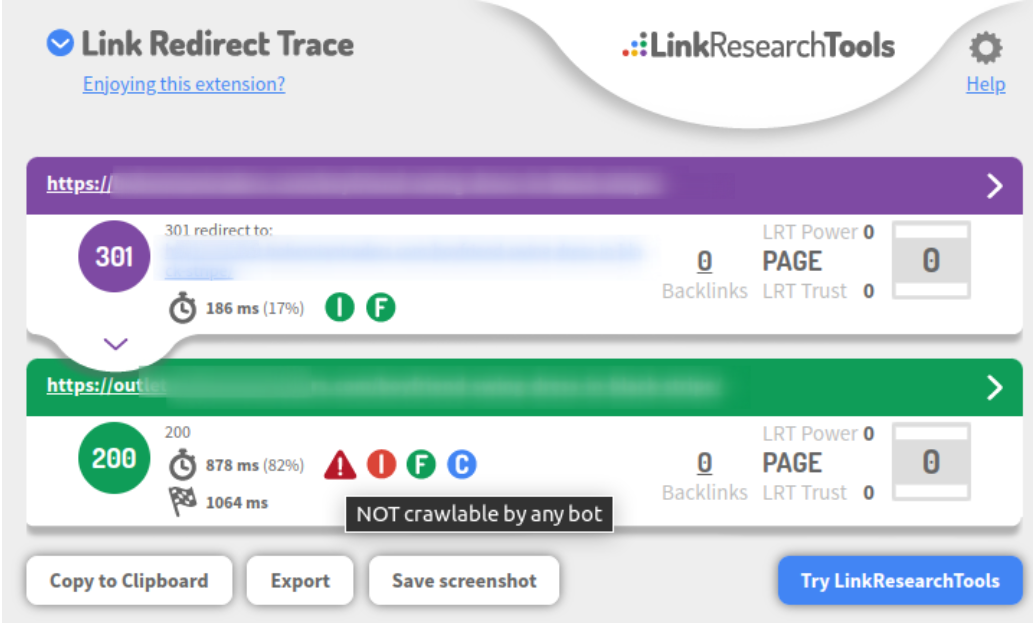

The problem is that some www URLs of this e-commerce store now redirect to a subdomain that blocks bots.

We can also see this clearly, URL by URL, in the popular free browser extension Link Redirect Trace.

As a result, the www URLs are being de-indexed, because they now redirect into the area where bots are blocked.
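Link Redirect Trace shows the chain for one URL at a time. To check many URLs, a rough sketch like this one could follow a redirect map you have already recorded (source URL to target URL; collecting it is not shown) and then test the final URL against the robots.txt of the host it lands on, since robots.txt rules apply per host. The hosts, the redirect map, and the function names are hypothetical, and the prefix-matching caveat of `urllib.robotparser` applies here too.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def final_url(url: str, redirects: dict[str, str], max_hops: int = 10) -> str:
    """Follow a recorded redirect map (source URL -> target URL) to its end."""
    seen = set()
    for _ in range(max_hops):
        if url not in redirects or url in seen:
            break  # end of the chain, or a redirect loop
        seen.add(url)
        url = redirects[url]
    return url


def blocked_after_redirect(url: str, redirects: dict[str, str],
                           robots_by_host: dict[str, str],
                           agent: str = "Googlebot") -> tuple[str, bool]:
    """Return (final URL, True if the final host's robots.txt disallows it)."""
    target = final_url(url, redirects)
    host = urlsplit(target).netloc
    parser = RobotFileParser()
    # A host with no recorded robots.txt is treated as allowing everything
    parser.parse(robots_by_host.get(host, "").splitlines())
    return target, not parser.can_fetch(agent, target)


if __name__ == "__main__":
    redirects = {
        "https://www.example.com/product/42": "https://shop.example.com/product/42",
    }
    robots_by_host = {
        "www.example.com": "User-agent: *\nDisallow:",
        "shop.example.com": "User-agent: *\nDisallow: /",
    }
    for url in ["https://www.example.com/product/42", "https://www.example.com/about"]:
        print(url, "->", blocked_after_redirect(url, redirects, robots_by_host))
```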

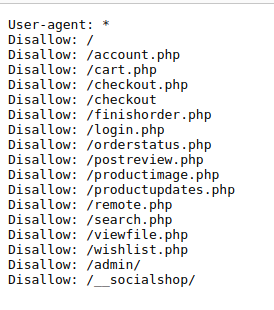

When we look at the robots.txt file of the subdomain, we see that its first two lines already block all URLs:

```
User-agent: *
Disallow: /
```

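What was probably intended here was to block only the root URL “/”.

Unfortunately, that is not how robots.txt works: a Disallow value is matched against the beginning of the URL path, and every path starts with “/”, so “Disallow: /” blocks every URL on the host.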
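To see why “Disallow: /” catches everything, here is a minimal sketch of the path matching described in RFC 9309 and in Google's robots.txt documentation: a rule matches when its pattern matches the start of the URL path, `*` matches any run of characters, a trailing `$` anchors the end of the path, and the longest matching rule wins (with `allow` winning a tie). The function names are hypothetical, and this is a simplified illustration rather than Google's parser (for example, it ignores percent-encoding).

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex anchored at the path start."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # '*' matches any sequence of characters; everything else is literal
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """rules: ("allow" | "disallow", pattern) pairs from one user-agent group."""
    best_len, allowed = -1, True  # no matching rule means the path is allowed
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty value (e.g. "Disallow:") matches nothing
        # re.match only matches at the start of the path (prefix matching)
        if pattern_to_regex(pattern).match(path):
            # the longest (most specific) pattern wins; allow wins a tie
            if len(pattern) > best_len or (len(pattern) == best_len and kind == "allow"):
                best_len, allowed = len(pattern), kind == "allow"
    return allowed


if __name__ == "__main__":
    block_all = [("disallow", "/")]
    block_root_only = [("disallow", "/$")]
    for path in ["/", "/products/red-shoes"]:
        print(path, is_allowed(path, block_all), is_allowed(path, block_root_only))
    # prints:
    # / False False
    # /products/red-shoes False True
```

Under these rules, a pattern such as `/$` would match only the root URL, while `/` matches every path on the host.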

The fix for this Google SEO problem

The solution is quick and easy:

- Remove the rule that blocks all URLs, i.e. “Disallow: /”

- Wait for Google to recrawl the URLs, or request indexing for them manually (for example, with the URL Inspection tool in Google Search Console)

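If you want a URL de-indexed in Google, use a noindex directive (a robots meta tag or an X-Robots-Tag HTTP header) instead. Google can only see that directive on pages it is allowed to crawl, so the page must not be blocked in robots.txt.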
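Because the noindex directive lives in the HTTP response itself, Google has to be able to fetch the page to see it. This sketch checks an already fetched response for noindex in either an `X-Robots-Tag` header or a `<meta name="robots">` / `<meta name="googlebot">` tag, using only Python's standard library. The function and class names are hypothetical, fetching the page is not shown, and user-agent-prefixed header values such as `googlebot: noindex` are not handled.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None
        values = dict(attrs)
        name = (values.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = values.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(headers: dict[str, str], html: str) -> bool:
    """True if the response carries noindex in an X-Robots-Tag header or robots meta tag."""
    for key, value in headers.items():
        if key.lower() == "x-robots-tag":
            if "noindex" in [d.strip().lower() for d in value.split(",")]:
                return True
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_noindex({}, page))  # True
    print(has_noindex({"X-Robots-Tag": "noindex"}, "<html></html>"))  # True
```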

What is your Google indexing problem?

Let us know by tweeting @URLinspector and we’d be happy to cover it in an upcoming post.